Something I noticed during the first event of the Scripting Games was the use (or misuse) of pipeline support for a parameter in a function or script. While most people did this correctly, a decent number did things that would never work if someone attempted to pipe data into their function. I want to clarify and expand on some things that I talked about in a previous article: what should be done, and why some of the methods being used will not work the way you might think.

We all know that being able to pass objects (not text!) through the pipeline with PowerShell is amazing and very powerful. Taking the output from one cmdlet and streaming it into another command lets us chain commands together seamlessly. In most cases, streaming also limits the amount of memory that the current session allocates for those commands, because objects are handed down the pipeline one at a time instead of being collected first.

Getting started…

Want to know more about the pipeline? Then do the right thing and explore PowerShell’s awesome help system with the following command:

Get-Help about_pipelines

What you may not know is how to properly implement this in your own functions to get the benefit of the pipeline. By this, I mean the Begin, Process and End blocks. I am going to start by showing some mistakes that can be made with this implementation and how to overcome them.

First off, how do I allow my parameter to accept pipeline input? By specifying one of the following parameter attributes:

- ValueFromPipeline

  - Accepts the whole incoming object when it is of the type the parameter expects, or can be converted to that type.

- ValueFromPipelineByPropertyName

  - Accepts the value of a property on the incoming object when that property has the same name as the parameter (or one of its aliases) and is of the expected type, or can be converted to it.

Now with that out of the way, let’s look at what you might expect to see:

Param (

[parameter(ValueFromPipeline=$True)]

[string[]]$Computername

)

The Computername parameter supports pipeline input by value for a string or a collection of strings. If we instead wanted to accept pipeline input from a property with the same name as Computername (or any alias defined with the [Alias()] attribute), we would use the following:

Param (

[parameter(ValueFromPipelineByPropertyName)]

[Alias('IPAddress','__Server','CN')]

[string[]]$Computername

)

This allows me to do something like pipe the output of a WMI query using Get-WMIObject into a function, which would grab the __Server property of each object and bind it to the Computername parameter. Pretty cool stuff! If you use the *ByPropertyName attribute, make sure that the incoming object actually has a property matching the parameter name, or that you use an Alias attribute that maps to whatever property the incoming object does have.
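As a minimal sketch of that alias mapping, the example below pipes two stand-in objects (made-up values, not real WMI output) into a function. The function name Get-AliasDemo and the server names are assumptions for illustration; the parameter block is the one shown above. It assumes PowerShell V3 or later for [pscustomobject].

```powershell
# Hypothetical function name; the parameter block mirrors the one above
Function Get-AliasDemo {
    [cmdletbinding()]
    Param (
        [parameter(ValueFromPipelineByPropertyName=$True)]
        [Alias('IPAddress','__Server','CN')]
        [string[]]$Computername
    )
    Process {
        ForEach ($Computer in $Computername) {
            $Computer
        }
    }
}

# Stand-in objects with made-up values
$byAlias = [pscustomobject]@{ CN = 'Server1' } | Get-AliasDemo
$byName  = [pscustomobject]@{ Computername = 'Server2' } | Get-AliasDemo
```

Here $byAlias should hold Server1 (bound through the CN alias) and $byName should hold Server2 (bound through the parameter name itself).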

Now on to the main point of this article which is setting up the guts of the function to process this correctly.

Begin, Process and End with no pipeline support

First off, if you are not accepting pipeline input, you really have no need to use Begin, Process and End because frankly, it is doing nothing for you other than just taking up space in your code. I know that people may be doing this as a way to organize their code, but there is a better way that I will show you in a moment.

Function Get-Something {

[cmdletbinding()]

Param (

[parameter()]

[string[]]$Computername

)

Begin {

Write-Verbose "Initialize stuff in Begin block"

}

Process {

Write-Verbose "Stuff in Process block to perform"

ForEach ($Computer in $Computername) {

$Computer

}

}

End {

Write-Verbose "Final work in End block"

}

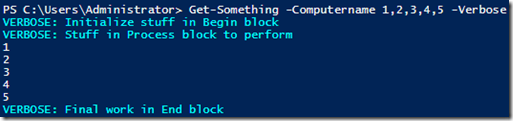

}

This gives a false sense that the blocks are doing something, when the code is simply running in the order it was written. Instead, take advantage of the PowerShell V3 ISE and its ability to use code folding with regions to organize your code accordingly.

Function Get-Something {

[cmdletbinding()]

Param (

[parameter()]

[string[]]$Computername

)

#region Initialization code

Write-Verbose "Initialize stuff in Begin block"

#endregion Initialization code

#region Process data

Write-Verbose "Stuff in Process block to perform"

ForEach ($Computer in $Computername) {

$Computer

}

#endregion Process data

#region Finalize everything

Write-Verbose "Final work in End block"

#endregion Finalize everything

}

Same output, but now without the Begin, Process and End blocks. I’ll repeat it again, if you don’t allow for pipeline input, then just stick with using #region/#endregion tags to organize your code (you should also do this regardless of pipeline input or not).

Pipeline support with no Process block support

Ok, so what happens if we specify a parameter that has pipeline support but the function has NO Process block? This was something I commonly saw during Event 1, so I will show you what happens when you run a command that is set up this way.

Function Get-Something {

[cmdletbinding()]

Param (

[parameter(ValueFromPipeline=$True)]

[string[]]$Computername

)

Write-Verbose "Initialize stuff in Begin block"

Write-Verbose "Stuff in Process block to perform"

$Computername

Write-Verbose "Final work in End block"

}

What do you think will happen when I run this with pipeline input? Will it process everything? Will it process nothing? Let’s find out!

If you thought that it would only show the last item in the pipeline, then you are the winner! Without a Process block, the whole function body behaves like an End block: it runs once, after all of the pipeline input has been received, and by then the parameter only holds the last item.
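To see this for yourself, here is a small sketch that captures the output in a variable. The function name Get-LastOnly and the input values are made up for the example.

```powershell
# Hypothetical function: pipeline-enabled parameter, but no Process block
Function Get-LastOnly {
    [cmdletbinding()]
    Param (
        [parameter(ValueFromPipeline=$True)]
        [string[]]$Computername
    )
    # With no named blocks, this body runs once, after all pipeline input has arrived
    $Computername
}

$result = 'Server1','Server2','Server3' | Get-LastOnly
$count  = @($result).Count   # number of items that actually came out
```

Only one item comes out of the function, and it is the last one that was piped in (Server3).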

The way to do it…

So what is the proper way to accomplish this? Let me show you with the following example.

Function Get-Something {

[cmdletbinding()]

Param (

[parameter(ValueFromPipeline=$True)]

[string[]]$Computername

)

Begin {

Write-Verbose "Initialize stuff in Begin block"

}

Process {

Write-Verbose "Stuff in Process block to perform"

$Computername

}

End {

Write-Verbose "Final work in End block"

}

}

Works like a champ now. But take a look at something here: the Write-Verbose statement runs for each item that is processed in the pipeline. What does this mean? It means that you have to be careful about what you put in the Process block, as it will run once for every item passed through the pipeline. In other words, don’t create the file that you will write output to, or the main array that will hold your data, inside the Process block, as in this example:

Function Get-Something {

[cmdletbinding()]

Param (

[parameter(ValueFromPipeline=$True)]

[string[]]$Computername

)

Begin {

Write-Verbose "Initialize stuff in Begin block"

}

Process {

$report = @()

Write-Verbose "Stuff in Process block to perform"

$report += $Computername

}

End {

Write-Verbose "Final work in End block"

$Report

}

}

All of the data collected was overwritten with each item, because $report is reset to an empty array every time the Process block runs. I also saw something similar to this on a few submissions during Event 1. Be careful not to make this mistake!
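A minimal sketch of the fix, assuming you really do want to collect everything and emit it at the end: create the array once in the Begin block and only add to it in the Process block. The function name Get-Report and the input values are made up for this example.

```powershell
# Hypothetical function name; same parameter as the example above
Function Get-Report {
    [cmdletbinding()]
    Param (
        [parameter(ValueFromPipeline=$True)]
        [string[]]$Computername
    )
    Begin {
        # Runs once, before any pipeline input arrives
        $report = @()
    }
    Process {
        # Runs once per pipeline item; only adds to the existing array
        $report += $Computername
    }
    End {
        # Runs once, after the last item
        $report
    }
}

$all = 'Server1','Server2','Server3' | Get-Report
```

Keep in mind that collecting everything into $report gives up the streaming benefit of the pipeline. If you don’t need the whole set at the end, just output each item from the Process block.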

Do I need all of these Begin, Process and End blocks?

With all of this information presented to you, does this mean that you only have to specify a Process block in your function? Well, yes and no. Yes if all you have is pipeline stuff to process and have no need to initialize anything else in the beginning. If you do have things to spin up, then add a Begin block to handle that, otherwise your function will fail when being run like this:

Function Get-Something {

[cmdletbinding()]

Param (

[parameter(ValueFromPipeline=$True)]

[string[]]$Computername

)

Write-Verbose "Initialize stuff in Begin block"

Process {

Write-Verbose "Stuff in Process block to perform"

$Computername

}

}

The function will actually load into memory without issue, but check out what happens when you attempt to run the function.

All seems well until we get to the Process piece. Because code appears before it, Process is no longer read as a Process block; it is interpreted as a command name and resolved to Get-Process, which obviously fails. The point here is to keep everything inside the Begin, Process and End blocks if you have need for them.

Function Get-Something {

[cmdletbinding()]

Param (

[parameter(ValueFromPipeline=$True)]

[string[]]$Computername

)

Begin {

Write-Verbose "Initialize stuff in Begin block"

}

Process {

Write-Verbose "Stuff in Process block to perform"

$Computername

}

}

Much better!

Multiple parameters that accept pipeline input

Now for something a little different. I saw at least one submission that had multiple parameters with pipeline input and wondered how was that going to work (turns out not so well!). See this example:

Function Get-Something {

[cmdletbinding()]

Param (

[parameter(ValueFromPipeline=$True)]

[string[]]$Name,

[parameter(ValueFromPipeline=$True)]

[string[]]$Directory

)

Begin {

Write-Verbose "Initialize stuff in Begin block"

}

Process {

Write-Verbose "Process block"

Write-Host "Name: $Name"

Write-Host "Directory: $Directory"

}

End {

Write-Verbose "Final work in End block"

$Report

}

}

Instead of the usual numbers into the pipeline, I am going to use Get-ChildItem and pipe that into my function to see what happens.

Weird, isn’t it? Both parameters receive the same value, simply because each one accepts pipeline input by value. How do we get around this issue? Use the ValueFromPipelineByPropertyName attribute instead.

Function Get-Something {

[cmdletbinding()]

Param (

[parameter(ValueFromPipelineByPropertyName=$True)]

[string[]]$Name,

[parameter(ValueFromPipelineByPropertyName=$True)]

[string[]]$Directory

)

Begin {

Write-Verbose "Initialize stuff in Begin block"

}

Process {

Write-Verbose "Process block"

Write-Host "Name: $Name"

Write-Host "Directory: $Directory"

}

End {

Write-Verbose "Final work in End block"

$Report

}

}

Now we are able to pull two separate values with 2 parameters that accept pipeline input. Another option would be to use ParameterSets, but that would mean that you would only have one parameter or the other to accept pipeline input and wouldn’t have the output that I have above allowing the use of multiple parameters to accept input AND use that in the function side by side.
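If you want to try this without touching the file system, here is a small sketch that uses a stand-in object in place of Get-ChildItem output. The function name Get-NameAndDirectory and the property values are made up, and the function returns a string instead of using Write-Host so the result can be captured. It assumes PowerShell V3 or later for [pscustomobject].

```powershell
# Hypothetical function; both parameters bind by property name
Function Get-NameAndDirectory {
    [cmdletbinding()]
    Param (
        [parameter(ValueFromPipelineByPropertyName=$True)]
        [string[]]$Name,
        [parameter(ValueFromPipelineByPropertyName=$True)]
        [string[]]$Directory
    )
    Process {
        "Name: $Name | Directory: $Directory"
    }
}

# Stand-in for a file object, with made-up values
$item = [pscustomobject]@{ Name = 'notes.txt'; Directory = 'C:\Temp' }
$line = $item | Get-NameAndDirectory
```

Each parameter picks up the property that matches its own name, so $line holds both values side by side.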

One last thing, take care when using both ValueFromPipeline and …ByPropertyName with multiple parameters as it can cause some craziness in the output.

Function Get-Something {

[cmdletbinding()]

Param (

[parameter(ValueFromPipeline=$True,ValueFromPipelineByPropertyName=$True)]

[string[]]$Name,

[parameter(ValueFromPipeline=$True,ValueFromPipelineByPropertyName=$True)]

[string[]]$Directory

)

Begin {

Write-Verbose "Initialize stuff in Begin block"

}

Process {

Write-Verbose "Process block"

Write-Host "Name: $Name"

Write-Host "Directory: $Directory"

}

End {

Write-Verbose "Final work in End block"

}

}

In fact, this completely freaks out the Directory parameter, which doesn’t actually bind to anything. This is due to the order of binding when you use both of these attributes.

Order of Parameter Binding Process From Pipeline

- Bind parameter by Value with same Type (No Coercion)

- Bind parameter by PropertyName with same Type (No Coercion)

- Bind parameter by Value with type conversion (Coercion)

- Bind parameter by PropertyName with type conversion (Coercion)

You can use Trace-Command to dig deeper into this and really see what is happening. Working with Trace-Command can be complicated and reading all of the output can certainly be overwhelming, so use at your own discretion!

This is a little bonus content on working with Trace-Command and seeing where the parameter binding is taking place as well as when Coercion and No Coercion is taking place. I’ll be covering 6 one-liners to highlight specific items with the parameter binding.

The baseline that I will be using is two objects, one with a timestamp stored as a string and one with a timestamp stored as a [datetime], that will be piped into 5 functions to show each method of binding.

#String time

$nonType = New-Object PSObject -prop @{Datetime = "5:00 PM"}

#[datetime] type

$Type = New-Object PSObject -prop @{Datetime = [datetime]"5:00 PM"}

Looking at how parameter binding handles different types

Here we will look at a simple function that accepts pipeline input by PropertyName to handle incoming data.

Function Get-Something_PropName {

[cmdletbinding()]

Param (

[parameter(ValueFromPipelineByPropertyName=$True)]

[datetime[]]$Datetime

)

Process {$Datetime}

}

First let’s run my variable with the [datetime] type already defined and see where the parameter binding takes place:

Trace-Command parameterbinding {$Type | get-Something_PropName} -PSHost

You can see that it started out at line 5 by first seeing if it can pass the NO COERCION with the ByPropertyName attribute by validating that it is the type of [datetime] with the result being SUCCESSFUL.

Next up: the non-type property for the datetime parameter.

Trace-Command parameterbinding {$nonType | get-Something_PropName} -PSHost

Remember where the NO COERCION worked on the last run because the property was of the same type as the parameter requirement? Well, it doesn’t work out so well with my string value of “5:00 PM”. You can see where it doesn’t pass with a SKIPPED. Next up is the attempt to cast the input (COERCION) as the [datetime] type so it can match what the $DateTime parameter is requiring. This is done using the [System.Management.Automation.ArgumentTypeConverterAttribute] and in this case, it is SUCCESSFUL.

As a side note, I will be using the $nonType variable from here on out to show each time how it fails the NO COERCION attempt before the COERCION attempt.

Working with the [Alias()] attribute

Writing advanced functions that support the pipeline also potentially means using the [Alias()] parameter attribute to handle incoming properties whose names don’t match the parameter name. This is most important when working with ByPropertyName.

Function Get-Something_PropName_NoAlias {

[cmdletbinding()]

Param (

[parameter(ValueFromPipelineByPropertyName=$True)]

[datetime[]]$Date

)

Process {$Date}

}

Trace-Command parameterbinding {$nonType | get-Something_PropName_NoAlias} -PSHost

Well, that is certainly interesting. If you look at line 4 here, it shows an arg of System.Management.Automation.PSCustomObject which isn’t all that useful. This is because the property being passed is DateTime while the parameter of this function is Date. The ByPropertyName binding completely fails because no property on the incoming object matches the parameter. So will ByValue work instead? Let’s find out.
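The Get-Something_Value_NoAlias function used in the next command is not listed in this article. A minimal sketch, assuming it mirrors the function above with ValueFromPipeline swapped in for ValueFromPipelineByPropertyName, would look like this:

```powershell
# Assumed definition: same as Get-Something_PropName_NoAlias, but binding ByValue
Function Get-Something_Value_NoAlias {
    [cmdletbinding()]
    Param (
        [parameter(ValueFromPipeline=$True)]
        [datetime[]]$Date
    )
    Process {$Date}
}
```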

Trace-Command parameterbinding {$nonType | get-Something_Value_NoAlias} -PSHost

A little better this time around, but still a failure. Since it is ByValue, the parameter doesn’t care about the names of the properties on the object being passed through. It does show the input in hash-table-style notation with the data viewable, but it still fails because the object is neither of the [datetime] type nor convertible to it. Just for fun, let’s pass a single integer into this and see how it works out.

Trace-Command parameterbinding {1 | get-Something_Value_NoAlias} -PSHost

Obviously it was never going to be of the [datetime] type, but it was easily converted into a [datetime] type object so it was able to bind to the parameter even without the alias attribute.
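To see why the integer binds, you can try the same conversions with a cast outside of any function. This is only a sketch of the type conversion itself, not of what the parameter binder does internally; the variable names are made up.

```powershell
# An integer cast to [datetime] is read as a number of ticks
$fromInt = [datetime]1
$ticks   = $fromInt.Ticks

# The string stored in $nonType can be converted too (today's date at 5:00 PM)
$fromString = [datetime]"5:00 PM"
$hour       = $fromString.Hour
```

The first cast gives a date one tick after the minimum [datetime] value, and the second gives a time with an hour of 17.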

Ok, now we are going to add an Alias attribute for ‘DateTime’ to handle the incoming object.

Function Get-Something_PropName_Alias {

[cmdletbinding()]

Param (

[parameter(ValueFromPipelineByPropertyName=$True)]

[Alias('DateTime')]

[datetime[]]$Date

)

Process {$Date}

}

Trace-Command parameterbinding {$nonType | get-Something_PropName_Alias} -PSHost

As expected with the nontype input, the first check (NO COERCION) fails and then, thanks to the alias that matches the incoming DateTime property, the COERCION check is successful. Rather than show what would happen with the ByValue attempt, what do you think will happen this time around? HINT: History will repeat itself.

Working with both ByPropertyName and ByValue and Aliases

Up until now, I have been working with either ByValue or ByPropertyName, but never actually combining both into a function. That changes with the following example. Here I will have both configured, as well as an Alias, to show what happens with the nontype input.

Function Get-Something_PropName_Value_Alias {

[cmdletbinding()]

Param (

[parameter(ValueFromPipeline=$True,ValueFromPipelineByPropertyName=$True)]

[Alias("Datetime")]

[datetime]$Date

)

Process {$Date}

}

This shows a perfect example of the list I showed on how the parameter attempts to bind the incoming data. First it attempts ByValue and ByPropertyName with NO COERCION (matching the type of object to parameter). Then it proceeds to type conversion (COERCION), trying ByValue first, which fails because the object itself cannot be converted to [datetime], before finally succeeding with the ByPropertyName type conversion.

Piping a 1 instead of $nonType, as I did earlier, shows a different result: it succeeds on ValueFromPipeline WITH COERCION.
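Here is a small sketch you can run to compare the two inputs without reading the trace output. It repeats the function and the $nonType object from above so that it is self-contained; the $fromObject and $fromInt variable names are made up.

```powershell
Function Get-Something_PropName_Value_Alias {
    [cmdletbinding()]
    Param (
        [parameter(ValueFromPipeline=$True,ValueFromPipelineByPropertyName=$True)]
        [Alias("Datetime")]
        [datetime]$Date
    )
    Process {$Date}
}

# Same string-based object used throughout this section
$nonType = New-Object PSObject -Property @{Datetime = "5:00 PM"}

# Object with a Datetime property: comes out as today's date at 5:00 PM
$fromObject = $nonType | Get-Something_PropName_Value_Alias

# Plain integer: comes out as a date one tick after the minimum [datetime] value
$fromInt = 1 | Get-Something_PropName_Value_Alias
```

Both inputs bind, but by different routes. Wrapping either pipeline in Trace-Command, as in the earlier examples, shows which step of the binding order succeeded.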

If you are not using aliases for the parameters in your function, expect some issues as well. If you pass an object into your function that doesn’t have a property with the same name as your parameter, ByPropertyName binding will fail no matter how the pipeline attributes are set. The only way binding would succeed is if you pass a single value (vs. an object that has multiple properties) that works for ByValue and is either of the same type or can be converted to the required type.

That wraps up this post on implementing pipeline support as well as taking a swim into using Trace-Command to debug parameter binding. Hopefully this has provided you enough information to feel better prepared to implement pipeline support as well as to troubleshoot it when it fails.

Here’s a weird one. If you have two parameters, and one is byvalue and one is bypropertyname, the byvalue one will take precedence and will convert the hash table of the other parameter to a string.

myscript.ps1 contains:

[cmdletbinding()]

Param (

[parameter(ValueFromPipeline=$True)]

[string]$string1='string1',

[parameter(ValueFromPipelineByPropertyName=$True)]

[string]$string2

)

Process {

"string1 is $string1"

"string2 is $string2"

}

Then I run:

[pscustomobject]@{string2='string2'} | myscript

string1 is @{string2=string2}

string2 is string2

$string1 becomes ‘@{string2=string2}’, the string version of the hash, even if it has a default value of ‘string1’.

Much Appreciated

Great Article and I love your website. I use it all the time. I do have a question that I need some clarification on.

According to MSDN’s official documentation on ValueFromPipelineByPropertyName Argument on the about_functions_advanced_parameters site and/or in the help in PowerShell, it states that this argument indicates that the parameter accepts input from a property of a pipeline object and the object “property” must have the same name or alias as the parameter. If I were to type:

Get-Service -Name "XblGameSave" -ComputerName $env:COMPUTERNAME | Set-Service -Status Stopped

how is this possible with the ComputerName parameter not being an actual property of the Service Controller Object but the parameter has an attribute of ValueFromPipelineByPropertyName? Is it because the argument parameter has the same alias or “name” in both cmdlets? I just want to make sure I am not over-thinking it. Thanks

I forgot to add that yes, I know MachineName is the actual property of a Service Controller but when dealing with ValueFromPipelineByPropertyName, I still want to know how is it possible without having to try to attempt any calculated properties and mess with the ETS to update the type data.

Very helpful, I’ve been looking a long time for an example of [parameter(ValueFromPipeline=$True,ValueFromPipelineByPropertyName=$True)]

Awesome post !

Found this website so useful that I just added it to my newsfeed so that I won’t miss any new post 🙂

I have a function that I want to return the pipeline back to the calling script. How do I do that?

Thanks for this detailed article.

It is clear with easy to read with nice examples/screenshots.

I will now use pipelines much better.

Very thorough. Good work. Appreciate the handy reference.

Thank you! I appreciate the kind words.

Note that if you use [Alias(“propName”)], it is case sensitive.

That seems like a bug to me if that is still happening. In my opinion, PowerShell should not be case sensitive with anything related to parameters, to include aliases. Do you recall what version that you experienced this on?

question – If I am using Invoke-Command (w/o foreach) which executes the code simultaneously on multiple servers then do I still need the “process” block when “Valuefrompipeline=$true”. ? I did not find it failing or am I mistaken ?

You must have a Process block if you are supporting the ValueFromPipeline* attributes, otherwise it will not work properly.

Wow, thanks for explaining this so thoroughly, excellent!

Could you explain why “ForEach” is necessary in “Process” block?

Process {

Write-Verbose "Stuff in Process block to perform"

ForEach ($Computer in $Computername) {

$Computer

}

}

# if I do this, seems i still get the same result?

ForEach ($Computer in $Computername) {

Write-Verbose "Stuff in Process block to perform"

$Computer

}

Thanks!

The ForEach is in place to handle the input if you use the -Computername parameter vs. piping the output into the function.
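A minimal sketch of the difference, reusing the Get-Something pattern from the article (the output string and the server names are made up for the example):

```powershell
Function Get-Something {
    [cmdletbinding()]
    Param (
        [parameter(ValueFromPipeline=$True)]
        [string[]]$Computername
    )
    Process {
        # Pipeline input: Process runs once per item, so $Computername holds one name each time.
        # Parameter input: Process runs once, and $Computername holds the whole collection.
        ForEach ($Computer in $Computername) {
            "Processing $Computer"
        }
    }
}

$fromParameter = Get-Something -Computername 'Server1','Server2','Server3'
$fromPipeline  = 'Server1','Server2','Server3' | Get-Something
```

Both calls produce one line per computer. Without the ForEach, the parameter call would produce a single line with all three names joined together.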

I am also confused by this, but have seen this example several times from Don, so I’m assuming it is purposeful. When accepting a parameter as “ValueFromPipeline”, why is it useful to declare it as a string array “[string[]]$Computername”, vs simply string “[string]$Computername”, or untyped “$Computername”. By defining the input as a string array, but accepting it as pipeline input, I would intuitively think this function expected a pipeline of string arrays, whereas it only seems like a single array of strings is being passed, which is then treated as a pipeline of single-element arrays?

I consider it a better practice to define the object type that you are expecting, which is why I typically go with [string[]] (or similar) to state that I am allowing a collection of strings vs. just leaving it without a type declaration. The issue with not declaring a type on the parameter will become apparent if you are using the parameter vs. pipeline input as it will throw errors. Keep in mind that it doesn’t have to be a collection of strings, but could be another type such as [int[]] or something else.

This is very Informative – learned and refreshed a lot of stuff.

Excellent Post!!! Thank You!

That was a great post! Thanks for taking the time to chase down all of those topics, and for writing it up so well.
