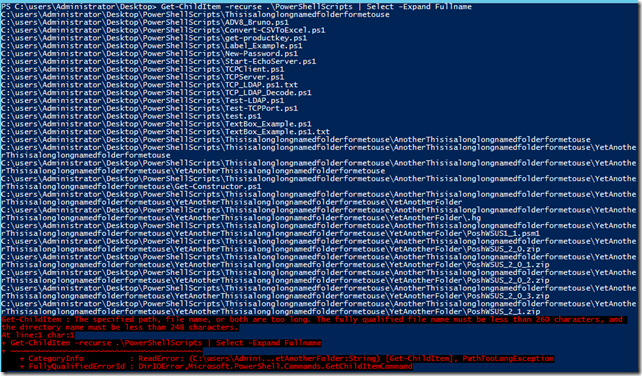

A common pain point for many system administrators is trying to recursively list all files and folders in a given path, or even just retrieve the total size of a folder. After waiting a while in hopes of seeing the data you expect, you are instead greeted with the following message, showing a failure to go any deeper in the folder structure.

Get-ChildItem -recurse .\PowerShellScripts | Select -Expand Fullname

This is the infamous PathTooLongException, which occurs when you hit the 260-character limit on a fully qualified path. Seeing this message means heartburn, because the folder query can no longer tell us how many files there are or how big those files are.
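As a rough illustration of where that limit bites, the sketch below flags path strings whose length reaches 260 characters. The function name `Test-PathTooLong` and the sample paths are made up for this example; it only measures string length and never touches the file system.

```powershell
# Hypothetical helper: reports whether a path string reaches the 260-character limit.
function Test-PathTooLong {
    param(
        [Parameter(Mandatory)][string]$Path,
        [int]$Limit = 260   # assumption: threshold taken from the limit described above
    )
    $Path.Length -ge $Limit
}

# Made-up sample paths: one short, one padded well past the limit.
$shortPath = 'C:\PowerShellScripts\Get-FolderItem.ps1'
$longPath  = 'C:\PowerShellScripts\' + ('SubFolder\' * 30) + 'Report.txt'

$shortResult = Test-PathTooLong -Path $shortPath
$longResult  = Test-PathTooLong -Path $longPath
```

A check like this is only useful once you already have the full paths as strings, which is exactly the part that fails when the listing itself throws.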

There are many reasons this can happen, such as users who map a drive letter to a folder deep in the folder structure and then continue to build more folders and sub-folders underneath it. I won’t go into the technical reason for this issue, but you can find one conversation that talks about it here. What I am going to do is show you how to get around this issue and see all of the folders and files, as well as get the total size of a given folder.

So how do we accomplish this feat? The answer lies in a freely available (and already installed) piece of software called robocopy.exe. Robocopy supports very long paths by default, so it does not adhere to the 260 character limit (unless you ask it to by using the /256 switch). That means you can set it off and it will grab all of the files and folders based on the switches that you use for it.

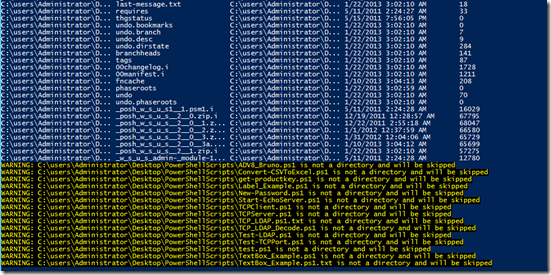

Using some switches in robocopy, we can list all of the files along with the size and last modified time as well as showing the total count and size of all files.

robocopy .\PowerShellScripts NULL /L /S /NJH /BYTES /FP /NC /NDL /XJ /TS /R:0 /W:0

As you can see, regardless of the total characters in the path, all of the data I was hoping to see in my original query is now available. So what do the switches do, you ask? The table below explains each switch.

| Switch | Description |
| --- | --- |
| /L | List only – don’t copy, timestamp or delete any files. |
| /S | Copy subdirectories, but not empty ones. |
| /NJH | No Job Header. |
| /BYTES | Print sizes as bytes. |
| /FP | Include Full Pathname of files in the output. |
| /NC | No Class – don’t log file classes. |
| /NDL | No Directory List – don’t log directory names. |
| /TS | Include source file Time Stamps in the output. |
| /R:0 | Number of Retries on failed copies: default 1 million. |
| /W:0 | Wait time between retries: default is 30 seconds. |
| /XJ | eXclude Junction points. (normally included by default) |

I use the /XJ so I do not get caught up in an endless spiral of junction points that eventually result in errors and false data. If running a scan against folders that might be using mount points, it would be a good idea to use Win32_Volume to get the paths to those mount points. Something like this would work and you can then use Caption as the source path.

$drives = Get-WMIObject -Class Win32_Volume -Filter "NOT Caption LIKE '\\\\%'" |

Select Caption, Label

There is also /MaxAge and /MinAge where you can specify n number of days to filter based on the LastWriteTime of a file.
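To make the switch handling concrete, here is a minimal sketch of building the argument list and optionally appending the age filters. The `$MinAge` and `$MaxAge` variables are hypothetical inputs for this example, and the command line is only assembled as a string, not executed.

```powershell
# Base switches from the table above.
$params = New-Object System.Collections.ArrayList
$params.AddRange(@('/L','/S','/NJH','/BYTES','/FP','/NC','/NDL','/TS','/XJ','/R:0','/W:0'))

# Hypothetical inputs: set either to a number of days, or leave as $null to skip it.
$MinAge = 365
$MaxAge = $null

# Append the age filters only when a value was supplied.
if ($null -ne $MinAge) { [void]$params.Add("/MINAGE:$MinAge") }
if ($null -ne $MaxAge) { [void]$params.Add("/MAXAGE:$MaxAge") }

# The resulting command line, shown as a string rather than run.
$commandLine = 'robocopy .\PowerShellScripts NULL ' + ($params -join ' ')
```

Keeping the switches in a list makes it easy to add or drop a filter without rewriting the whole command.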

This is great and all, but the output is just a collection of strings and not really a usable object that can be sorted or filtered with PowerShell. For this, I use regular expressions to parse out the size in bytes, lastwritetime and full path to the file. The end result is something like this:

$item = "PowerShellScripts"

$params = New-Object System.Collections.Arraylist

$params.AddRange(@("/L","/S","/NJH","/BYTES","/FP","/NC","/NDL","/TS","/XJ","/R:0","/W:0"))

$countPattern = "^\s{3}Files\s:\s+(?<Count>\d+).*"

$sizePattern = "^\s{3}Bytes\s:\s+(?<Size>\d+(?:\.?\d+)\s[a-z]?).*"

((robocopy $item NULL $params)) | ForEach {

If ($_ -match "^\s+(?<Size>\d+)\s(?<Date>\S+\s\S+)\s+(?<FullName>.*)") {

New-Object PSObject -Property @{

FullName = $matches.FullName

Size = $matches.Size

Date = [datetime]$matches.Date

}

} Else {

Write-Verbose ("{0}" -f $_)

}

}
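The parsing step can be tried without running robocopy at all. The sketch below feeds a few made-up lines, shaped like the output these switches produce, through a variant of the pattern that is anchored to the start of the line so that error and summary lines are skipped. The sample lines and paths are assumptions for illustration only.

```powershell
# Made-up lines imitating robocopy output with /BYTES /FP /NC /NDL /TS.
$sampleOutput = @(
    "`t    1024`t2012/05/20 10:15:30`tC:\PowerShellScripts\Get-FolderItem.ps1"
    "`t  204800`t2011/11/02 08:01:59`tC:\PowerShellScripts\Old\Archive Report.txt"
    "2012/05/21 11:54:35 ERROR 5 (0x00000005) Scanning Source Directory C:\Locked\"
    "   Files :         2         2         0         0         0         0"
)

# Leading whitespace, size in bytes, one whitespace, date and time, then the full path.
$filePattern = '^\s+(?<Size>\d+)\s(?<Date>\S+\s\S+)\s+(?<FullName>.*)'

$files = foreach ($line in $sampleOutput) {
    if ($line -match $filePattern) {
        New-Object PSObject -Property @{
            FullName = $matches.FullName
            Size     = [int64]$matches.Size      # numeric, so it can be summed or sorted
            Date     = [datetime]$matches.Date
        }
    }
}
```

Casting `Size` to a number at this point means later sorting and summing behave numerically rather than as string comparisons.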

Now we have something that works a lot better using PowerShell. I can take this output and do whatever I want with it. If I want to just get the size of the folder, I can either take this output and then use Measure-Object to get the sum of the Size property or use another regular expression to pull the data at the end of the robocopy job that displays the count and total size of the files.
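The Measure-Object route might look like the following sketch. The three objects are made-up stand-ins for parsed robocopy output, with `Size` already converted to a number.

```powershell
# Made-up objects standing in for the parsed robocopy output.
$files = @(
    [pscustomobject]@{ FullName = 'C:\PowerShellScripts\a.ps1';     Size = [int64]1024 }
    [pscustomobject]@{ FullName = 'C:\PowerShellScripts\b.ps1';     Size = [int64]2048 }
    [pscustomobject]@{ FullName = 'C:\PowerShellScripts\Old\c.txt'; Size = [int64]1048576 }
)

# Sum the Size property; Measure-Object also reports how many items it saw.
$total = $files | Measure-Object -Property Size -Sum

$summary = [pscustomobject]@{
    Count  = $total.Count
    SizeMB = [math]::Round($total.Sum / 1MB, 2)
}
```

This approach needs every file object in memory first, which is why reading the totals from the end of the robocopy job can be preferable on very large folders.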

To get this data using robocopy and pulling the data at the end of the job, I use something like this:

$item = "PowerShellScripts"

$params = New-Object System.Collections.Arraylist

$params.AddRange(@("/L","/S","/NJH","/BYTES","/FP","/NC","/NDL","/TS","/XJ","/R:0","/W:0"))

$countPattern = "^\s{3}Files\s:\s+(?<Count>\d+).*"

$sizePattern = "^\s{3}Bytes\s:\s+(?<Size>\d+(?:\.\d+)?).*"

$return = robocopy $item NULL $params

If ($return[-5] -match $countPattern) {

$Count = $matches.Count

}

If ($Count -gt 0) {

If ($return[-4] -match $sizePattern) {

$Size = $matches.Size

}

} Else {

$Size = 0

}

$object = New-Object PSObject -Property @{

FullName = $item

Count = [int]$Count

Size = ([math]::Round($Size,2))

}

$object.pstypenames.insert(0,'IO.Folder.Foldersize')

Write-Output $object

$Size=$Null
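As an alternative to relying on fixed positions such as `[-5]` and `[-4]`, the summary lines can be located by pattern wherever they appear. This is a sketch of that variation, not the approach used above; the summary text is made up to resemble the end of a robocopy job.

```powershell
# Made-up summary block resembling the end of a robocopy job run with /BYTES.
$sampleSummary = @(
    '               Total    Copied   Skipped  Mismatch    FAILED    Extras'
    '    Dirs :         4         4         0         0         0         0'
    '   Files :        37        37         0         0         0         0'
    '   Bytes :    915482    915482         0         0         0         0'
    '   Times :   0:00:00   0:00:00                       0:00:00   0:00:00'
)

$countPattern = '^\s{3}Files\s:\s+(?<Count>\d+)'
$sizePattern  = '^\s{3}Bytes\s:\s+(?<Size>\d+)'

# Defaults cover the case where no summary line is found.
$Count = 0
$Size  = [int64]0

foreach ($line in $sampleSummary) {
    if ($line -match $countPattern) {
        $Count = [int]$matches['Count']
    } elseif ($line -match $sizePattern) {
        $Size = [int64]$matches['Size']
    }
}

$object = New-Object PSObject -Property @{
    FullName = 'PowerShellScripts'
    Count    = $Count
    Size     = $Size
}
```

Scanning for the lines costs a little more than indexing, but it keeps working if the number of trailing lines in the output ever differs.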

Not only was I able to use robocopy to get a listing of all files regardless of the depth of the folder structure, but I was also able to get the total count of all files and the total size in bytes. Pretty handy when you run into the character limit while scanning folders.

This is all great and can be fit into any script that you need to run to scan folders. Obvious things missing are the capability to filter for specific extensions, files, etc. But I think the tradeoff of being able to bypass the character limitation is acceptable, as I can split out the extensions and filter based on that.
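Splitting out the extension after the fact could be done along these lines. The helper name `Get-ExtensionFromPath` and the sample paths are hypothetical; the sketch works on the last path segment only, so dots in folder names are not mistaken for an extension.

```powershell
# Hypothetical helper: returns the extension (without the dot) of the last path segment.
function Get-ExtensionFromPath {
    param([Parameter(Mandatory)][string]$FullName)

    $leaf = ($FullName -split '\\')[-1]   # file name portion after the last backslash
    $dot  = $leaf.LastIndexOf('.')

    # A dot at position 0 (or no dot at all) is treated as "no extension".
    if ($dot -gt 0) { $leaf.Substring($dot + 1) } else { '' }
}

# Made-up paths, including a folder name that contains a dot.
$paths = @(
    'C:\PowerShellScripts\Get-FolderItem.ps1'
    'C:\PowerShellScripts\Notes.v2\readme'
    'C:\PowerShellScripts\Logs\run.2012.log'
)

# Keep only the PowerShell scripts.
$scripts = $paths | Where-Object { (Get-ExtensionFromPath -FullName $_) -eq 'ps1' }
```

Working from the leaf name avoids depending on the file system, which matters here because the paths may be too long for the usual file cmdlets.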

As always, having the code snippets to perform the work is one thing, but being able to put this into a function is where something like this will really shine. So with that, here is my take on a function to accomplish this, called Get-FolderItem.

You will notice that I do not specify a total count or total size of the files in this function. I gave it some thought and played with the idea of doing this, but in the end I felt that a user would really be more interested in the files that are actually found, and you could always save the output to a variable and use Measure-Object to get that information. If there are enough people asking for this to be added into the command, then I will definitely look into doing this. (Hint: If you use the -Verbose parameter, you can find this information for each folder scanned in the Verbose output!)

I’ll show various examples using my known “bad” folder to show how I can get all of the files from that folder and subfolders beneath it.

Get-FolderItem -Path .\PowerShellScripts | Format-Table

I only chose to use Format-Table at the end to make it easier to view the output. If you were outputting this to a CSV file or anything else, you must remove Format-Table.

Get-ChildItem .\PowerShellScripts | Get-FolderItem | Format-Table

Instead of searching from the PowerShellScripts folder itself, I use Get-ChildItem and pipe that information into Get-FolderItem. Note that only directories will be scanned while files are ignored.

You can also specify a filter based on the LastWriteTime to find files older than a specified time. In this case, I will look for files older than a year (365 days).

Get-FolderItem -Path .\PowerShellScripts -MinAge 365 | Format-Table

Likewise, now I want only the files that are newer than 6 months (186 days).

Get-FolderItem -Path .\PowerShellScripts -MaxAge 186 | Format-Table

It is important to understand that MaxAge returns the files written within the given number of days, while MinAge returns the files written before that. This is based on robocopy’s own parameters for filtering by days.
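The direction of the two filters is easy to mix up, so here is a small sketch that mimics the same semantics with Where-Object on made-up objects. It uses a fixed reference date to stay repeatable and is not meant to reproduce robocopy's exact boundary handling.

```powershell
# Fixed reference date so the example gives the same answer every time.
$now = [datetime]'2012/06/01'

# Made-up files written 10, 200 and 400 days before the reference date.
$files = @(
    [pscustomobject]@{ FullName = 'C:\PowerShellScripts\new.ps1'; LastWriteTime = $now.AddDays(-10) }
    [pscustomobject]@{ FullName = 'C:\PowerShellScripts\mid.ps1'; LastWriteTime = $now.AddDays(-200) }
    [pscustomobject]@{ FullName = 'C:\PowerShellScripts\old.ps1'; LastWriteTime = $now.AddDays(-400) }
)

# MinAge 365: keep files last written more than 365 days ago.
$olderThanYear = $files | Where-Object { $_.LastWriteTime -lt $now.AddDays(-365) }

# MaxAge 186: keep files last written within the last 186 days.
$newerThanSixMonths = $files | Where-Object { $_.LastWriteTime -ge $now.AddDays(-186) }
```

The file written 200 days ago falls in neither result, which is a quick way to confirm that the two filters select opposite ends of the timeline.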

Lastly, let’s get the count and size of the files.

$files = Get-FolderItem -Path .\PowerShellScripts

$files | Measure-Object -Sum -Property Length |

Select Count,@{L='SizeMB';E={$_.Sum/1MB}}

So with that, you can now use this function to generate a report of all files on a server or file share, regardless of the number of characters in the full path. The script file is available for download at the link below. As always, I am interested in hearing what you think of this article and the script!

Great Post, very useful, thanks.

use robocopy

Any reason why this is not running in Powershell 4? No errors, I just get a blank line when I try to run it in powershell.

I tightened up the file list match by anchoring it to the start of the line and expecting whitespace before the file size, to avoid inadvertent matches with the summary information:

"^\s+(?<Size>\d+)\s(?<Date>\S+\s\S+)\s+(?<FullName>.*)"

It looks like you are hacking something! LOL

Thanks for the script. It looks to be doing exactly what I want, if I can get it to run through all the files on my server. I’m dealing with an estimated 30 million files. Not surprisingly, trying to set the output to an object didn’t work (I let it run for 5 days). What I am surprised by is when only selected the fullname property and redirected output to a text file it did about 2.5 million files in half an hour and then ground to a halt and only did a few hundred thousand the hours after that. Any idea how I can capture that full path info for 30 million files I can work with?

Thank you for this. It has helped me really sort out a large problem.

Hello Boe,

First off, this script is awesome! I did have one question however, when I run it with…

. C:\scripts\get-folderitem.ps1

$Path = "C:\Test"

Get-ChildItem $Path | ?{ $_.PSIsContainer } | Get-FolderItem | Export-Csv "C:\Test Logs\test.csv" -notypeinformation

… I don’t get a list of files sitting in the C:\Test folder. I get every file in every subfolder of the C:\Test folder (i.e. "C:\Test\Exampe\Test123.txt") but nothing in the actual C:\Test directory itself (i.e. C:\Test\Example.txt).

I don’t know if this helps, but I’ve noticed that when I don’t include the $_.PSIsContainer portion I get this “Example.txt is not a directory and will be skipped.” Any idea on how to get it to not only return results in all of the subfolders as it already is… but also in the root that the script is running on itself?

Again, awesome script and many many thanks.

Long Path Fixer presents you with a simple list of files and folders in the current directory (including “hidden” files and folders). You can drag and drop files or folders onto it and it will navigate directly to path of whatever you dropped.

I read this post about a year ago and have since started using Robocopy as my go-to way to list directory information, and it’s been great… However, I just recently ran into a strange issue. I work in an office environment and I am preparing for some big changes. One thing I wanted to take care of is files and folders with a path that is too long. I wrote a script that gathers the path information with Robocopy and then checks the length of each path and reports it if it’s beyond the bounds I set. All good so far. I then decided to modify the script to do some basic trimming on the files, like removing double spaces and abbreviating commonly used words. What I discovered was that if there was an oddball character in the path, Robocopy would not correctly report the character. One example I can cite from this experience is the copyright symbol ©. A user has managed to create a folder with © in the name. First off, I’m surprised Microsoft allows this. Secondly, Robocopy simply reports it as a lower case c. This is a problem when I go to use the Robocopy output later to modify anything under that folder: I get a “Path not found” error. Not to mention the fact that I would now like to get a report on non-standard characters being used in file and folder names! Users always come up with the strangest things! Anyway, I love your posts, keep ’em coming!

Hello,

Can you please send the script that checks the files and folders names that are longer then 256 charterers please ? This will save my life. dgenchev@mail.bg

Hi AJ,

Did you solve this issue?

I’ve also run into this issue when trying to take the output of this script.

Francophone users have put é in the path names, which gets rewritten by this script to ‘?’ which obviously breaks functionality.

use /unilog:file.txt (not /log:file.txt) [in your robocopy copy]

It is excellent, worked perfect for me. Is there a way to get the Owner or last modified by of the file in your script?

You can use a custom calculated property for owner:

get-folderitem $path |

Select-Object *,@{name='owner';expression={

(get-acl $_.FullName).owner}}

Thank you for the script! The 255 character limit has proven to be a bear lately for me so this is great! One thing – I only need a list of the Directory paths Is there a modification to get this to just return a directory structure and not a list of files? I can work some excel magic on the file list csv but to skip that step(s) and just have a script that can give me a directory list (regardless of character length) would be amazing.

Thanks in advance!

Richard

Add “/B” to the list of parameters to work around file permission issues – if you are an administrator, /B allows ROBOCOPY to backup (read) and restore (write) files to a file system by making use of the Backup API.

Amazing, and exactly what I needed. I took the Robocopy line and adapted it into my own script (which seeks out files that were hit with a Crypto Ransomware virus, and replaces them with a good restored copy), and it allowed me to use the output of Robocopy to identify the renamed files that needed to be restored. Absolute lifesaver. 260 character limit.. pfft… next you’ll be saying that we don’t need any more than 640kb of memory!

I wrote something to parse the robocopy summary report and report folder sizes, inspired by this blog post. I put it here: http://www.powershelladmin.com/wiki/Get_Folder_Size_with_PowerShell,_Blazingly_Fast

Great script & tutorial Boe, saving a lot of my time 🙂

But somehow, it doesn’t work when I tried to scan some DFS folders. I want to modify the script, so it can follow mount point of DFS folder. Maybe you can give some advice, Boe.

Thank you.

Hi, I’m facing an error when executing your second script, which is:

$item = "PowerShellScripts"

$params = New-Object System.Collections.Arraylist

$params.AddRange(@("/L","/S","/NJH","/BYTES","/FP","/NC","/NDL","/TS","/XJ","/R:0","/W:0"))

$countPattern = "^\s{3}Files\s:\s+(?<Count>\d+).*"

$sizePattern = "^\s{3}Bytes\s:\s+(?<Size>\d+(?:\.?\d+)\s[a-z]?).*"

((robocopy $item NULL $params)) | ForEach {

If ($_ -match "(?<Size>\d+)\s(?<Date>\S+\s\S+)\s+(?<FullName>.*)") {

New-Object PSObject -Property @{

FullName = $matches.FullName

Size = $matches.Size

Date = [datetime]$matches.Date

}

} Else {

Write-Verbose ("{0}" -f $_)

}

}

The error encountered is :” Impossible to convert value “11:54:35 ERROR” to type “System.Date.Time” Error: “String was not recognized as a valid DateTime”” I shorten the message, which was in french originally.

Have you any idea why it went wrong?

Best regards,

Jean

Hi Boe,

Great stuff! Had a need for something very similar to this, borrowed your function and modified to pull folders only. No idea what to name it, picked Get-FolderEntry. http://gallery.technet.microsoft.com/scriptcenter/Get-FolderEntry-List-all-bce0ff43

Saved a bunch of time, thanks!

rcm

Awesome script! It’s a great workaround for the issue with Get-ChildItem detailed here: http://blogs.msdn.com/b/powershell/archive/2009/11/04/why-is-get-childitem-so-slow.aspx

As I’m having to deal with folders containing > 750,000 files, Get-ChildItem’s exponentially increasing file enumeration time makes it nearly impossible to use. Robocopy, on the other hand, works blazing fast. And this script neatly solves the problem of getting PowerShell to process Robocopy’s output.

As a side note, you might want to think about adding a switch to exclude files residing in subfolders to your script like so:

Params(…

[parameter()]

[switch]$DoNotRecurse

)

Begin {

$params = New-Object System.Collections.Arraylist

…

If ($PSBoundParameters['DoNotRecurse']) {

$params.Add("/lev:1") | Out-Null

}

This is useful for situations like the one I’m facing, where I need to move files created in a given year to a subfolder as part of an archival process. Naturally, I don’t want to process previously archived files. Adding that switch to your script allowed me to prevent robocopy from doing just that.

This script is great. Any idea on how I can use this to compare directories to see if they are the same? I have another script that uses Robocopy to copy files for backup purposes. Although my script checks the error code of Robocopy for problems, I wanted to use your script to double-check and make sure that the source and destination directory contents are really the same. I tried using your script with compare-object, but the output doesn’t look right. Any ideas? I was thinking of just looping through the files and comparing file names, but that doesn’t seem very efficient.

I’m parsing a huge (~6 million) file structure to find files or folders with specific strings in them, and have discovered a minor addition to speed things up and avoid the “{0} is not a directory and will be skipped” error when using Get-ChildItem:

Get-ChildItem -recurse | ?{ $_.PSIsContainer } | Get-FolderItem | Export-Csv "C:\test.csv"

The pipe to ?{ $_.PSIsContainer } sends only directories to Get-FolderItem, and in my testing gives byte for byte identical csv outputs to the version without, but minus the error messages, and in well under half the time. Hope this might help someone else in the future.

Thanks for the script, it made my life significantly easier, and saved me the worry about what exactly powershell was missing otherwise.

Nice share. For me with Path too long error I use “Long Path Tool” to get fast and easy fix .

Hi, nice one!

i thing i ve reached to the same goal with, something different.

i used this : http://alphafs.codeplex.com/ inside a recursive function. Works well, but i think, it’s faster with a robocopy. (if you are interested i can give you an example)

One problem with your script, file with no extension, and dots in the path. for example:

\\filer\azeaze\big_directory.1\file

your regular expression "-replace '.*\.(.*)','$1'" doesn’t work well …

but very nice work !

(srry for my english … 😉

Your script is such a blessing, one question. How would i go about changing the last write to the last accessed time? We are inventorying our folder structure and the request is for accessed rather than write as some people use files for reference or such. THank you again.

Unfortunately, this is a limitation of robocopy to only let you search based on the LastWritetime of a file. There is nothing I can do about this to let you filter based off of LastAccessTime in this function.

Thank you, is it possible to display last access date then that could dump to csv? Thank you

Not with this method. Robocopy will only show the LastWritetime when used.

I have done a TechNet Wiki Article on that topic. There is shown some other solution around the

path too long exception. (did you not found this on Internet search?)

Include a full blown (.NET) PowerShell Robocopy clone! So you don´t have to parse Text like the shown examples!

http://social.technet.microsoft.com/wiki/contents/articles/12179.net-powershell-path-too-long-exception-and-a-net-powershell-robocopy-clone.aspx

greets

Peter Kriegel

http://www.admin-source.de

Thanks for the comment and links.

Those are some great links with excellent information in them. I admit that I didn’t do a lot of searching prior to writing my script as I was short on time.

Unfortunately, some of those are 3rd party utilities (such as the robocopy clone) which sometimes cannot be used by everyone due to work requirements and the other examples do not quite meet the requirements of what I was setting out to do which was to include the bytes of each file. The text parsing really wasn’t that difficult because I had already had the switches required in robocopy to only give me what I needed to create the object without much loss to performance.

Nicely done! I like creative solutions like this.

Thanks!